On March 7, 2024, at the TDX event in San Francisco, Pharos founder and CEO Nikita Prokopev joined Matt Kaufman, founder and CEO of Mamba Merge, for a debate about the rise of AI and its impact on developers and admins. This lightly edited transcript of the video includes the slides that were used in the presentation.

Nikita Prokopev:

Hi everybody. Thanks for joining us bright and early. Happy to see everybody here today. First, we’ll get some introductions out of the way and we'll dive right into it.

I'm Nikita Prokopev, founder and head of product at Pharos AI. And helping me today is Mr. Matt Kaufman.

Matt Kaufman:

Thanks, Nikita. I'm Matt Kaufman, founder and CEO of Mamba Merge.

Nikita:

Just to set the stage real quick: Matt and I are both senior engineers. I've been at it since ’06. Matt, how about you?

Matt:

I started earlier. ’02.

Nikita:

Yeah, way, way too long. But it's been a fun journey.

What we're going to talk about today are some points about AI that you may not have heard of, but that I think will come up more and more in the future.

Some of these are a little far into the future, but with the way AI is developing, I think we're going to run into some of them sooner rather than later.

How about some light humor?

So, Matt and I were preparing for this talk, and we figured we'd throw in some AI jokes. These were generated by AI, and they're kind of fun. I mean, they're not something a stand-up comedian would use, but they're decent, you know. They made me chuckle at times.

I don't know if you guys have done your morning yoga, but AI has. And here's what it looks like:

So, you know, something new.

All right, let's move on to point number one of our discussion today. This point is something I thought of recently. I'm heavily involved in the development of the AI within our own product, Pharos. And as I was doing that work, it occurred to me: are we too much in love with AI?

As a senior engineer, I find AI super helpful. You've all seen the session here on Einstein GPT and what it can do for you. Code generation. It's super crazy. Matt, how about you? Are you leveraging AI today in your day-to-day work?

Matt:

I do use it. Usually, it's when I'm lazy and I'm like, oh, I gotta work on something and I'm procrastinating. I will use AI for that.

It's always stuff that I know how to do already. Or I could figure out how to do it and I just don't want to bother. So, it's more of a starter. It's not replacing my job. It's not replacing any employee's job because I wouldn't task employees with that. It's really just getting me to do my job a little faster.

Nikita:

I'm kind of in the same boat. You need a batch job drafted, some unit tests written, some anonymous Apex run, some data seeded in the sandbox. All these things you used to have to do from scratch, you can now just code-gen. And as I was doing this, I was thinking, hey, this is the kind of task you start doing when you're a junior.

By the way, from the audience here, has anybody been in the ecosystem for a year or two? A couple of people. Right. So when you're just starting out, this is the kind of stuff you work on. You may be asked to write a trigger or a batch job, or write some unit tests, something simple. Nobody's going to bring you into a project and start you on something complex, right? You have to learn by doing, and you have to start with the basics.

What's happening now is that these basics won't be done by people anymore, because AI is quite good at them. So how are juniors, our up-and-coming talent, going to come up through the ranks if they soon won't have the chance to learn? That's the question that's been bothering me. Matt, what's your take?

Matt:

Yeah. So I've thought about this a lot as we were preparing for this talk. And actually, I was at a Heroku event the other night and they said something really interesting.

They were saying that junior developers are actually using AI more because it allows them to figure out what they need to do without having to go talk to a more senior person or a mentor. So it's not replacing the junior folks. It's just we're kind of automating the training of them. So in a way, the AI is helping us and not taking away the job.

For me, I think that no matter what, we're going to need someone to do some work. And there'll always be someone who has to review that work.

So in a way, the juniors now become seniors. Their junior is the AI, right? Right. And then maybe we'll have an architect that looks over the whole thing. Typically, when I ask AI for something, I'm asking for a very specific small unit of code. A unit test is a good example. A small function. I actually tested this out. I asked ChatGPT to write a JavaScript function that will find one object in an array of objects, where a value matches a string that I put in.

So, for instance, we had a list of contacts, and I was looking for the one whose first name is equal to “Matt.” I know how to do it. I've done it before. I just don't remember exactly how. So I could either Google it, or I can just ask ChatGPT to do it.

And that's the kind of thing a junior would do, too. They can just go figure it out. I don't want my junior developer spending three hours figuring out something they could have Googled. This is just a faster way of doing it. That's the way I see it.

Nikita:

That's a good point. I really like this idea that you're not embarrassed to ask AI these questions. If you go and ask your mentor, they might think, “This person should know these things,” right? “Why are they talking to me about it?”

Matt:

“You've been here six months and you don’t know how to do this yet?”

Nikita:

Yeah, yeah. “You don’t know how to do a basic batch job? Come on, man.”

Alright, well, here's another analogy I came up with. How many of you in the audience have heard of assembly language? For those of you who don't know, back in the 1980s, that was the hot thing. If you wanted to write anything halfway decent, you had to use assembly language. And it is not meant for humans. When you look at it, it's totally different from the languages we see today. It's very low level: it moves data between registers and crunches numbers, and that's what people had to do.

But nowadays you can't even find an assembly programmer unless you go into, like, 3D graphics or some niche device development. That's maybe where you'll find one. Today people don't even learn at that level. They start with higher-level concepts right away. So I think we're going to see something like that, where prompting is going to be the new thing, right?

Matt:

Yeah. So the other thing is, we use a lot of terms. We say software engineer, we say developer, we say coder, we say app builder. But there's also the term programmer. I studied linguistics, so I tend to be hypercritical of words, and if you really break it down, a programmer tells the computer what to do, programming it: step one, step two, step three.

Prompting is the same thing. We're just telling the AI what to do by speaking or writing words instead of writing actual code. So I really don't see the job changing; it's just the tool that's changing. An analogy would be a stenographer. At some point people were taking notes by hand, writing in, I guess, print or cursive, or, you know, chipping away at tablets…

Nikita:

Ancient monks copying manuscripts!

Matt:

Yeah, yeah, manuscripts. Right? You know, whatever it was, recording words was a very slow process. And then we got shorthand. Then we got typewriters, and then faster typewriters. And now, we don't have it here, but the [TDX] keynotes are [getting transcribed and projected in real time]. It's doing it by itself.

So we still do the same thing; we're just using new tools. I see AI as a tool that programmers will use to program, which means the programmers still exist. They're still doing the programming, right? We just have a new tool to help.

Nikita:

One thing I'll throw in there is, you might get good at prompting one LLM. That's all well and good: you learn, and you know how to ask it things in a certain way.

But then another LLM comes out, which is going to happen more and more often, and all your [older] prompts are garbage. I don't know if you guys remember, but when GPT-3.5 was around and then GPT-4 came out, we had to throw away all of our prompts.

And if you think about it, verifying a prompt is a very, very human task. AI cannot verify its own correctness. Not yet. So say you're a junior and you know how to ask an LLM things a certain way. Then you switch to a new version, and all of a sudden your prompts don't work anymore, and you don't have the skills to know whether what it's outputting is, in fact, correct.
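As a minimal sketch of what keeping prompt verification in human hands could look like when switching models (this is not something shown in the talk), the TypeScript below runs a fixed set of prompts through a model and checks each output against a rule a person wrote. The `Model` type, the two stub "model versions," and the test cases are all hypothetical; a real LLM client would be asynchronous and vendor-specific.

```typescript
// Hypothetical: a "model" is any function from prompt text to output text.
// Real LLM clients are asynchronous and vendor-specific; stubs keep this runnable.
type Model = (prompt: string) => string;

// One regression case: the prompt plus a human-written check on the output.
interface PromptCase {
  description: string;
  prompt: string;
  check: (output: string) => boolean;
}

// True only if the output is JSON whose firstName field equals `expected`.
function hasFirstName(output: string, expected: string): boolean {
  try {
    const parsed = JSON.parse(output) as { firstName?: unknown };
    return parsed.firstName === expected;
  } catch {
    return false; // not valid JSON (or not an object), so the check fails
  }
}

const promptCases: PromptCase[] = [
  {
    description: "bare YES/NO answer",
    prompt: "Reply with only YES or NO: is 7 a prime number?",
    check: (out) => out.trim() === "YES",
  },
  {
    description: "plain JSON with firstName",
    prompt: 'Return JSON like {"firstName": "..."} for the contact Matt Kaufman.',
    check: (out) => hasFirstName(out, "Matt"),
  },
];

// Returns the descriptions of every case whose output fails its check.
function findRegressions(model: Model, cases: PromptCase[]): string[] {
  return cases.filter((c) => !c.check(model(c.prompt))).map((c) => c.description);
}

// Two stand-in "versions" of a model. The newer one is arguably still correct,
// but its extra wording breaks code that expects the old output format.
const olderModel: Model = (prompt) =>
  prompt.includes("JSON") ? '{"firstName": "Matt"}' : "YES";
const newerModel: Model = (prompt) =>
  prompt.includes("JSON") ? 'Sure! {"firstName": "Matt"}' : "Yes, 7 is prime.";

console.log(findRegressions(olderModel, promptCases)); // []
console.log(findRegressions(newerModel, promptCases)); // both case descriptions
```

The point of the design is that the checks encode what a person decided "correct" means, so they stay meaningful even when the model underneath changes.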

Matt:

Yeah. Another way to think about it is front-end engineers versus back-end engineers. I could probably ask ChatGPT to write some JavaScript code, because I've done that, but I don't even know how to begin asking it to make these AI images, let alone get the images to be correct. We're going to show you a lot of examples of these bad images. Whoever made those is probably not a front-end prompt engineer, right? They don't know how to ask it.

So, I think the buzzwords on our resumes in the future are going to be things like front-end prompt engineer and back-end prompt engineer, plus which languages and which models you specialize in.

Nikita:

Yeah. Right now it's [all-in-one] prompting. In the future we might see very, very niche areas of prompting, and that's going to be your path.

Matt:

Yeah.

Nikita:

All right. Well, this is interesting, but let's move on to our next point. Before we do, I know it's been raining a lot recently, and, you know, you've got to hold on to that umbrella.

Matt:

Yeah. So this is a back-end engineer. Yeah. For sure.

Nikita:

All right, point number two.

So this is an interesting one because, as an engineer, it's a lot easier to write code from scratch because you know exactly what's going on. You know exactly how you're going to do it. Matt, would you say that modifying existing code is harder?

Matt:

Yeah. For sure. If you were to show me code right now, I'm like, “it's terrible.” Doesn't matter if I wrote it three months ago. Right? You look at someone else's code or your own and, you’re like, this is really bad. It's rare that I say, oh, this is beautiful. It's a masterpiece. I couldn't have done it any better.

But if I start writing it from scratch, the moment I'm done I think, “Wow, I did it! It's so great!” So, you know, it's kind of a weird experience. When you write new code, it seems easier, and you're more satisfied. When you look at existing code, it's just not fun.

Nikita:

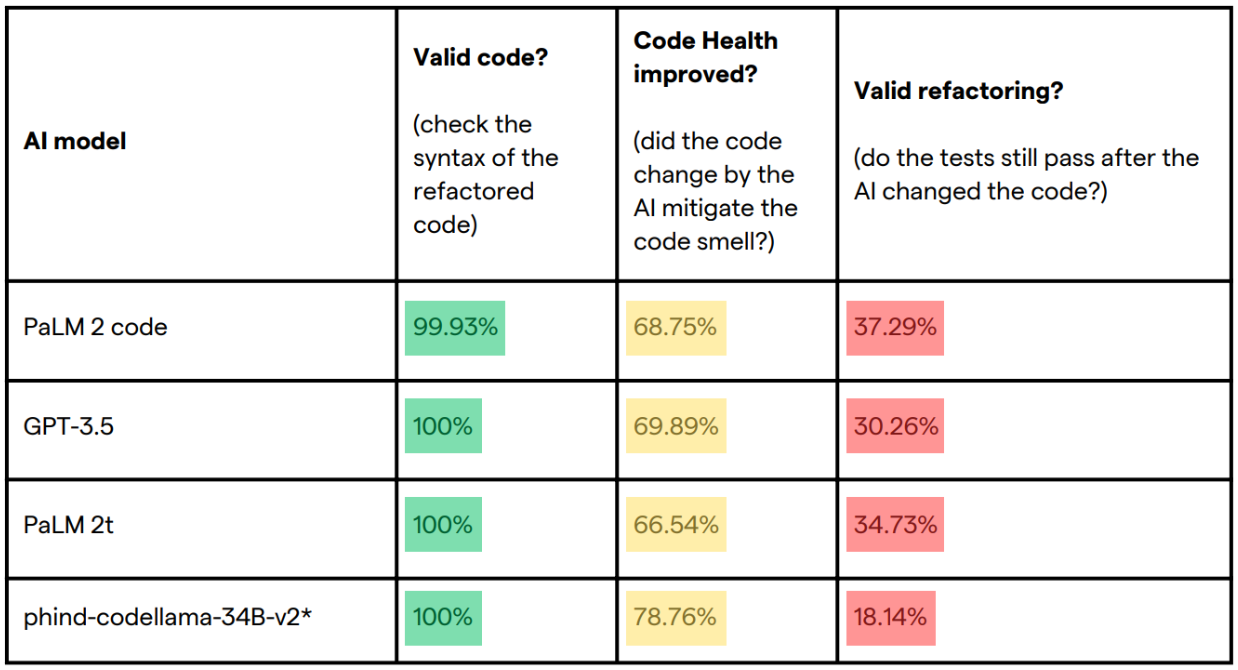

And code that's not the greatest quality is just everybody's worst nightmare. And that's what we have to deal with every day, right? When you come onto a project, you don't write things from scratch. You're stuck with the baggage. So there's a study in this article here; I think it's a white paper. For those of you who can't quite see the table, I don't blame you. I'm just going to summarize it for you.

So what they did was they put different LLMs against the same code base, and they wanted to measure how well AI performs at refactoring. And by refactoring, we mean that we're going to keep the existing functionality, but we're going to try to improve the code quality.

What happened here was, the left-most column, where it's all green, means the code is valid, right? The AI generated valid code that compiled, which is awesome, right? It's working code. Then column number two asks, “Did code quality actually improve?” And it did: the lowest score was 68% and the highest was 78%. So it improved code quality quite a bit, which is what we want, right? That's the point of refactoring.

But the problem starts in column number three, where it's all red. The best score there is 37% and the worst is 18%. So in the worst case only 18% of the functionality worked correctly, and in the best case, 37%.

And that's just not acceptable, if you think about it. You prompt an LLM to do something, and it spits out a bunch of code, but you didn't write it, right? It's not like you wrote it from scratch and understand it well enough to fix those bugs. But you still have to fix those bugs.
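One common way to check the third column's concern, whether a refactor kept the existing functionality, is to run the old and new versions side by side on the same inputs and flag any disagreement (often called differential testing). The TypeScript below is a minimal sketch of that idea, not the method used in the study; `legacyTotal`, `refactoredTotal`, and the `LineItem` shape are hypothetical, and the "refactor" is deliberately buggy to show what the check catches.

```typescript
// Hypothetical line-item shape and functions, invented for illustration.
interface LineItem {
  price: number;
  quantity: number;
}

// Original implementation: verbose, but correct.
function legacyTotal(items: LineItem[]): number {
  let total = 0;
  for (let i = 0; i < items.length; i++) {
    total = total + items[i].price * items[i].quantity;
  }
  return total;
}

// A cleaner-looking "refactor" that compiles but silently drops quantity.
function refactoredTotal(items: LineItem[]): number {
  return items.reduce((sum, item) => sum + item.price, 0);
}

// Runs both versions on the same inputs and returns every input where they disagree.
function findBehaviorChanges<I, O>(
  original: (input: I) => O,
  refactored: (input: I) => O,
  inputs: I[],
): I[] {
  return inputs.filter((input) => original(input) !== refactored(input));
}

const cases: LineItem[][] = [
  [],
  [{ price: 10, quantity: 1 }],
  [{ price: 10, quantity: 3 }, { price: 5, quantity: 2 }],
];

const mismatches = findBehaviorChanges(legacyTotal, refactoredTotal, cases);
console.log(`${mismatches.length} of ${cases.length} cases changed behavior`); // 1 of 3 cases changed behavior
```

Note that the first two cases pass, so a test suite that only used single-quantity items would miss the bug. Strict equality (`!==`) works here because the outputs are numbers; comparing objects or floating-point results would need a deep-equality or tolerance-based comparison instead.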

Matt:

So that's job security!

Nikita:

Right? So I would ask, at that point, why use AI for this at all? AI is just plain not good at it. So, Matt, what do you think? Is it going to get better? Is it going to stay the same? Or are we just not going to use AI for this very big pain point?

Matt:

I tested this, too. When I asked ChatGPT to write that function, it wrote it in, well, let's just say, old-school JavaScript. I recognized it as just a for loop, and I know there's another way to do it. I could have written this code myself if I had spent the time. So then I had to revise my prompt and say, “Write it with ES6,” and it rewrote it. And I could keep asking over and over to get these small improvements, but at some point I might as well have just done it myself.
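To make Matt's example concrete, here is a sketch of the two styles he describes, written in TypeScript: a plain for loop versus ES6's `Array.prototype.find`. The `Contact` shape and the sample data are assumptions for illustration; the talk doesn't show the code ChatGPT actually produced.

```typescript
// Hypothetical contact shape; the talk only describes "a list of contacts".
interface Contact {
  firstName: string;
  lastName: string;
}

// "Old-school" style: a plain for loop that returns the first match.
function findByFirstNameLoop(contacts: Contact[], name: string): Contact | undefined {
  for (var i = 0; i < contacts.length; i++) {
    if (contacts[i].firstName === name) {
      return contacts[i];
    }
  }
  return undefined;
}

// ES6 style: Array.prototype.find returns the first element matching the predicate,
// or undefined if none does.
const findByFirstName = (contacts: Contact[], name: string): Contact | undefined =>
  contacts.find((contact) => contact.firstName === name);

const contacts: Contact[] = [
  { firstName: "Nikita", lastName: "Prokopev" },
  { firstName: "Matt", lastName: "Kaufman" },
];

console.log(findByFirstName(contacts, "Matt")?.lastName); // Kaufman
```

Both versions return the same object; the ES6 one just states the intent ("find the first match") directly instead of spelling out the loop.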

Nikita:

Right.

Matt:

So, I think it will get better. I think they'll develop languages where, ideally, it would go straight to the latest version of JavaScript. Why are we even looking at the old stuff?

Nikita:

Right.

Matt:

But that's going to take time. Or you have to get better at prompting, where you basically write a really robust prompt ahead of time, then ask one small question and start refining.

Nikita:

I guess the other thing we could do is this: AI is great at summarizing. We all know that. So feed it some legacy code and have it try to summarize it for you. That'll get you started on the refactoring process when you have no idea what [the old code] is doing and there's no documentation. That's a great way to go about it. Once you have some understanding of the code, maybe don't rely on the generated code, but at least it gives you some structure to start with.

The other thing I think is going to happen is that AI will get better at this, and quite quickly. But if you look at that right-most column, 37% is horrible. Even 90% is really bad, because that means there are still a lot of bugs in there.

So are we going to see more emphasis on testing? As in, QA, or maybe runtime monitoring becoming more of a thing, because, hey, you've got to catch those errors in production, right? Matt, what do you think?

Matt:

I was talking with a friend about this yesterday. If you use AI to generate unit tests for Apex right now, it doesn't write assertions. It just makes sure your lines of code are executed. So if everyone is going to do that, do we even need unit tests? Do we need the 75% [code coverage requirement]? At some point it's like, what's the point? We're following the letter of the law and ignoring its spirit. So it's a huge issue. Either we say, you know what, forget it, throw it all out the door, or people are going to have to go in and actually make sure that things are logical and that we're testing the business rules, not just line 5, line 6, line 7.
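A minimal sketch of the difference Matt describes, written in TypeScript rather than Apex: both tests below execute every line of a hypothetical `applyDiscount` rule, but only the second one asserts the business outcome, so only it catches an off-by-one bug at the threshold. The discount rule, the buggy variant, and the test helpers are invented for illustration; in Apex, the assertions would typically use `System.assertEquals`.

```typescript
// Hypothetical business rule, invented for illustration:
// orders of 100 or more get a 10% discount.
function applyDiscount(amount: number): number {
  if (amount >= 100) {
    return amount * 0.9;
  }
  return amount;
}

// Coverage-only "test": it runs both branches, so every line is covered,
// but it never checks a result, so a wrong discount would still pass.
function coverageOnlyTest(discount: (amount: number) => number): void {
  discount(150);
  discount(50);
}

// Business-rule test: same coverage, plus assertions on the expected outcomes,
// including the boundary case at exactly 100.
function businessRuleTest(discount: (amount: number) => number): void {
  const expectClose = (actual: number, expected: number, label: string): void => {
    if (Math.abs(actual - expected) > 1e-9) {
      throw new Error(`${label}: expected ${expected}, got ${actual}`);
    }
  };
  expectClose(discount(150), 135, "over threshold");
  expectClose(discount(100), 90, "at threshold");
  expectClose(discount(50), 50, "under threshold");
}

// A buggy variant (">" instead of ">=") that a coverage-only test cannot tell apart.
const buggyDiscount = (amount: number): number => (amount > 100 ? amount * 0.9 : amount);

coverageOnlyTest(applyDiscount); // passes
coverageOnlyTest(buggyDiscount); // also passes
businessRuleTest(applyDiscount); // passes
try {
  businessRuleTest(buggyDiscount);
} catch (e) {
  console.log((e as Error).message); // at threshold: expected 90, got 100
}
```

Both tests would report the same line coverage; the only difference is whether the business rule itself is checked.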

Nikita:

Yeah, that's a good point. Anyway, these are very interesting research papers, and these slides will be shared, so have a look.

And before we move on to the next one, here's a little tutorial on how to eat a hamburger. You know…

Matt:

So when I first saw this, I obviously noticed the guy not eating the hamburger properly, but what really freaked me out is down here. The top bun is so much bigger than the bottom bun. This is a bagel over here, I think. There are just so many mistakes in this.

Nikita:

All right. Our last point for the day is actually quite simple.

We all know how LLMs are trained. They're trained on public data, and there's plenty of it. And it's all human-generated at this point. Now, AI is going to start dumping more of its output into the public domain. So guess what? That output is going to be used to train our next generation of LLMs. So there's going to be this dogfooding thing going on, and the article linked here talks about this exact thing.

So are we going to reach a point of diminishing returns, where the LLM is trained on its own output and gets worse and worse over time? What's going to happen? One of the things they're talking about is that the only way to fix it is to periodically infuse the LLM with truly human-generated content. And that raises the question: are we going to have certified human content, like certified organic vegetables? Is that what's going to happen, Matt?
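The feedback loop Nikita describes can be illustrated with a toy simulation, loosely in the spirit of the model-collapse article listed under Additional Resources. This is an analogy only, not how LLMs are trained. Here a "model" is just a normal distribution that is refit, generation after generation, to a small sample drawn from the previous generation's model. Because the maximum-likelihood variance estimate is biased low by a factor of (n-1)/n and the errors compound, the fitted variance tends to shrink toward zero with no fresh data, meaning the model forgets the spread of the original data. Mixing fresh samples from the original distribution into each generation, standing in for "truly human-generated content," keeps it from collapsing. The PRNG, sample size, generation count, and 50% mix are arbitrary choices for the sketch.

```typescript
// Small seeded PRNG (mulberry32) so runs are reproducible; returns values in [0, 1).
function mulberry32(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Box-Muller transform: one normal sample with the given mean and standard deviation.
function gaussian(rand: () => number, mean: number, sd: number): number {
  const u1 = 1 - rand(); // in (0, 1], so Math.log stays finite
  const u2 = rand();
  return mean + sd * Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
}

// Refits a normal "model" for many generations and returns the final fitted variance.
// freshFraction is the share of each generation's samples drawn from the original N(0, 1).
function simulate(generations: number, sampleSize: number, freshFraction: number, seed: number): number {
  const rand = mulberry32(seed);
  const freshCount = Math.round(sampleSize * freshFraction);
  let mean = 0;
  let variance = 1; // generation 0 matches the original "human" data, N(0, 1)
  for (let g = 0; g < generations; g++) {
    const samples: number[] = [];
    for (let i = 0; i < sampleSize; i++) {
      samples.push(
        i < freshCount
          ? gaussian(rand, 0, 1) // fresh "human" sample
          : gaussian(rand, mean, Math.sqrt(variance)), // sample from the current model
      );
    }
    // Maximum-likelihood refit (the variance divides by n, so it is biased low).
    mean = samples.reduce((sum, x) => sum + x, 0) / sampleSize;
    variance = samples.reduce((sum, x) => sum + (x - mean) ** 2, 0) / sampleSize;
  }
  return variance;
}

const collapsed = simulate(1000, 20, 0, 42); // trained only on its own output
const refreshed = simulate(1000, 20, 0.5, 42); // half of every batch is fresh data
console.log({ collapsed, refreshed });
```

The exact numbers depend on the seed, so no output is shown; what should hold is that `collapsed` ends up orders of magnitude smaller than the original variance of 1, while `refreshed` stays on the same order as the original.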

Matt:

Yeah. So I can remember a time when there probably existed a thing called a data analyst or a data scientist, but it wasn't a job that we saw a lot. It seems like it wasn't that long ago. Time moves quickly. I think that this is a job that we're going to start seeing.

So we're not just going to have programmers and prompt engineers. We're going to have this other kind of person whose job is to look at material and decide: is this good material to train with, or is this bad material? And if it is good, we somehow give it some type of certification checkmark, whatever it may be.

It doesn't matter, really, if it was generated by AI or not. I think it's more about the quality. Is it good?

Nikita:

Well yeah, that's true. Also, I think there's going to be a premium price on human-generated content in the future because a lot of LLM vendors are going to need it to make sure their LLMs don't collapse. That’s actually a term, “model collapse.” So they're going to pay a premium price for that. Your output will be worth a lot more just because you are human and there are people willing to pay for it. So I think that's going to happen.

Matt:

I don't know if CAPTCHA is working on this, but with those images, instead of saying, “Find the bicycles,” it should be, “Find the images that are human-generated; find the images that are AI-generated.” Then we'd basically be crowdsourcing the answer to “Is this good or not?”

And maybe it'll even get to words, like, “read this sentence. Does this make sense?” “Is this the definition of a tent? Or a guy camping?”

Nikita:

Imagine training AI on this. We're just going to see stuff straight out of Summer of Love. It’s going to be great.

All right, well, we're at time. I just wanted to see if anybody has any questions or wants to add anything. Happy to pass it to you.

[Audience question]

Just to repeat the question for everyone: it's about job security. Are we going to have fewer positions available, and how is this going to affect us as a whole?

Matt:

So, obviously we don't know, right? If you go to the extreme and look at the steno pools in old offices, those jobs are all gone for sure. I assume those people are still in the workforce, but doing different work. It's probably going to be some ebbs and flows, ups and downs.

Nikita:

Yeah. And if you think about it, our jobs are not just writing code or, you know, building flows. We're doing it for other humans. And who's going to interact with those humans? They're going to want to interact with humans, too, right? So I think the human element is going to be more and more important in whatever roles emerge in the future, whether you're prompting, coding, or whatnot.

Matt:

Yeah, my guess is that the more time you spend in meetings for your job (not because you want to be in the meetings, but because of the nature of the job), the more likely it is that you're going to keep your job. Whereas if you're on a factory floor doing the same thing over and over the whole time, that's more likely to get, you know, taken away through automation.

Additional Resources:

- Salesforce and AI: Trust, But Verify

- Refactoring vs Refuctoring: Advancing the state of AI-automated code improvements

- The AI feedback loop: Researchers warn of ‘model collapse’ as AI trains on AI-generated content
